I’ve been a member of the popular community discussion network Nextdoor for many years, and about a year ago was asked to be a volunteer Review Team member for my area. I accepted and have been helping with the moderation process since. It’s clear from comments that people don’t know how content moderation works on Nextdoor, so here’s how my side of things works…

You don’t have to be an investor in the chaos that is X/Twitter to know that one of the greatest challenges with any social media, whether user comments on a newspaper article or a potentially racist or sexist Instagram post, is that community standards and content moderation are really difficult to implement. Do we let everyone post anything they want, however crude, violent, or hateful?

Even the venerable First Amendment of the US Constitution has limits, and, of course, every community has its generally accepted limitations too. You don’t, for example, talk with your friends in the middle of a kindergarten classroom using the same language you would use late at night at a rave. At least, I hope you don’t!


But content that one person feels should be censored is often something that another person views as part of the rough-edged give-and-take of contemporary debate. The challenge of our times is to create a content moderation system that respects the freedom to disagree without also allowing comments and responses that break down a community and cause upset and fear. Through a consensus approach, Nextdoor actually does a good job with its moderation. I’m just on the first rung of the moderation ladder as a Review Team member, but here’s how it looks when I log in to Nextdoor…

CONTENT MODERATION ON NEXTDOOR

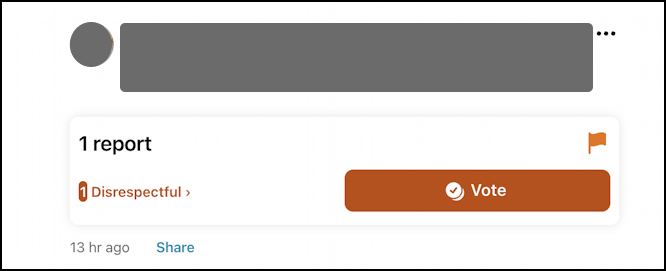

Like most social media sites, Nextdoor shows me notifications every time I log in. The difference is that, alongside the usual alerts that someone I follow has posted or that someone has engaged with something I’ve posted, there’s a notice that some content needs moderation. Flagged content is then shown within the discussion, making it easy to scroll up to see the original question or comment:

NOTE: I have masked the actual content I’ve been asked to vote on to respect the privacy of both the poster and the person who reported the content. This is true throughout this article.

Notice above that there’s one report; egregious content can receive multiple reports, though if there’s a steady stream of reports for something really awful, I think Nextdoor addresses it without us Review Team moderators ever being in the loop.

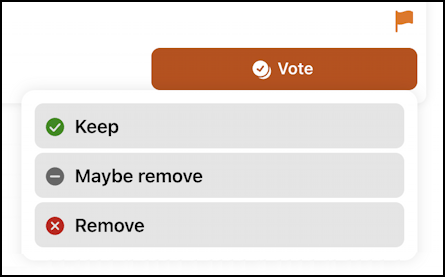

At this point, I generally scroll up to get a sense of the discussion and find out what the original post was about. When I’m ready, I click “Vote” and get these options:

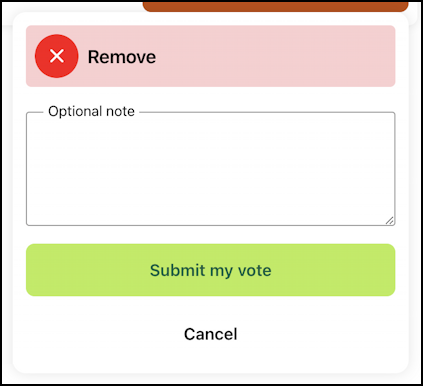

There’s a fourth option in addition to Keep, Maybe remove, and Remove: skip voting on it entirely. Since I can’t see that data point, I don’t know how many moderators choose that option. Nextdoor has its own terms of service, but I generally evaluate whether the poster is being overly personal in their attacks or violating the agreed-upon rules of my own community (disagree, but don’t be rude). After I click, say, “Remove”, I then have a chance to add a comment if desired:

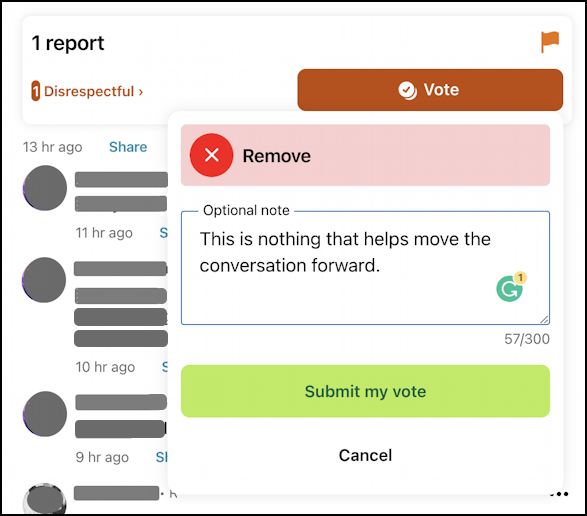

Mostly I don’t leave comments, but sometimes I like to explain my vote if I feel it’s going to differ from how most moderators will evaluate the flagged content. Here’s what I added for this particular reported comment:

If you’re curious, this is the same basic rule I apply to comments on my Web sites, social media posts, and similar: are you contributing to a discussion or just dropping a bomb, hoping everything screeches to a stop? I am happy to see a lively debate and am fine if someone holds an entirely different perspective. But rude? Mean? Threatening? Using ad hominem attacks? That’s what gets a Remove vote from me.

SEEING THE VOTING RESULTS ON NEXTDOOR

Once I press that green “Submit my vote” button, I then see a tally of how fellow Review Team members have voted on this particular content:

Looks like we’re 1 yes, 1 no so far. I can see more details – including who voted for what and who reported the content in the first place – by clicking on “See votes”:

Again, I’ve masked things for privacy, but you can see that 13 hours earlier someone reported the original comment as disrespectful, then almost immediately someone else on the Review Team voted to keep it. Me? I voted to remove it.

HOW DOES MODERATOR VOTING WORK ON NEXTDOOR?

This leads to the question of how everything’s tallied and counted on Nextdoor. To find out whether it’s based purely on moderator votes, how many votes are needed, and so on, I checked in with the company, relying mostly on its help page about Review Team moderation. That page explains:

“When you vote on a piece of content that someone else has reported, the member will not be notified of your vote. If your vote triggers the removal of content, the author will be notified that their content has been removed, but they will not be informed about the identity of either the member(s) who made the report or of the Review Team members or Leads who removed their content.”

Privacy is a big issue with all of this because few people would want to be involved in the moderation process if they were going to be threatened and harassed by angry people who were posting inappropriate content on the site.


On the other hand, there is retaliatory reporting of content, where someone will go and report lots of comments from a person they’ve taken a dislike to; this becomes visible when benign comments show up in the moderation queue. It’s not a stretch to imagine two adversaries endlessly reporting each other’s comments as a way to try to harass each other.

Fortunately, it’s not easy to be completely barred from Nextdoor, even if some of your posts are flagged. We Review Team moderators are not part of that decision process either; that’s the job of the actual paid content moderation team (a higher rung on the ladder I mentioned earlier). My impression is that you have to be pretty far out of line to get even a temporary ban, let alone be expelled from the digital community.

Finally, there’s no specific number of votes that closes an item. When I asked the help team, they explained “There is no number or percentage. Reported content is evaluated based on local votes and automated systems.”

Now you know what the first tier of content moderation is like. In a typical week I have maybe a dozen items to review, and sometimes I just take a break and ignore the site because I have too much else going on or just don’t want to deal with the bickering and childish interaction. Being in the trenches, however, offers a lot of insight into the incredibly difficult job of trying to moderate user interaction in a society where the person espousing an opinion is apparently as valid a target as the opinion itself.

Pro Tip: I’ve been writing about technology, social media, and the digital community for many years. You can find a lot of my articles here in the Computer and Internet Basics area. Check it out; there might well be more that will pique your interest.

I would like to know WHY I receive notifications from NextDoor DAYS after someone posted something. Wow — it could be something that is a warning, but obviously, days later it is too late.

When I click on something that takes me to NextDoor, I may scroll down the page and see postings from very close to my neighborhood but I never received any email about it. Maybe there’s something I can do about my notifications? If so, I’m not aware.

Hello,

Do you notice any biases especially when local politics come into play? If so, how do you structure your decisions in regard to those biases?

An interesting question, Marian. There are definitely biases both in the conversations and the moderation process, but I think it balances out. My own decisions are based on my views and biases, as are all moderators, but I am also passionate about freedom of speech, especially for views that are different than my own, so…

Hi! Thanks for your clear presentation. I was recently inducted into the Nextdoor Review Team. Just wondering if you have discovered more about how the automated logic works that makes the final determination based on the consensus of the reviewers? Is it a simple majority? How many days does it take for the automated process to kick in and make a final decision?

All of that information is pretty opaque at NextDoor, even to us community mods. I’d be interested in learning more too…